Multidevice Preview: For when two (or more) cameras are better than one

We learn by doing, which is why a growing number of companies are using XR to simulate real-world scenarios for their employees to train in. Add hand tracking, and users feel fully immersed and can even build muscle memory.

These virtual training environments are ideal for controller-free hand tracking, because holding a controller immediately removes the user from what they would be doing in the real world. Hand tracking is the only viable way to imitate real-life interaction.

The problem is that your hands typically can’t go far outside the headset’s field of view: if they do, tracking might be lost and the immersion broken.

For developers building Unity projects that include hand tracking, Ultraleap has solved this problem with “Multidevice”, now available in our Unity Plugin as a Preview.

With Multidevice, you can add extra cameras to training simulator environments to cover a larger tracked area and increase the visibility of the hands. Multidevice merges data from multiple cameras to provide seamless, continuous, reliable hand tracking at the edge of the user’s reach and beyond the headset field of view.

This means that if the user’s hands are away from the headset, or are at the user’s side, hand tracking is not lost and the immersion is unbroken. As a result, the simulation can be a more reliable gauge of whether an individual can successfully perform the task at hand.

Example scene

We have provided a simple example scene, Multidevice Aggregation (VR), which can be found by going to the Unity Package Manager and importing the Ultraleap Tracking Preview Examples.

This example scene has been built to show developers how a Unity app can simultaneously receive and interpret hand data from multiple cameras positioned around a user. It is not specific to any use case; it simply demonstrates how hand tracking can continue to function even when the user’s hands are outside the view of the Ultraleap camera mounted on the front of the headset.

To help illustrate how Multidevice can be used specifically for pilot training, we have created videos that run through the setup and then show it working:

This video shows a basic pilot cockpit setup in Unity, outlining how to configure the scene relative to the multiple cameras you are going to use

This video shows the basic scene in action, driven by hands tracked by a camera attached to a headset and a camera in the Desktop position

Looking to use Multidevice in your Unity project? Read on…

What’s included?

Compatibility with Windows systems running Ultraleap’s hand tracking software 5.6 or newer

Capacity to integrate multiple Ultraleap tracking cameras, connected to a single Windows PC

A way for developers to build a Unity app (Unreal support coming soon) that receives and interprets a single user’s hand data generated by multiple cameras simultaneously

The ability to use individual cameras in different orientations as needed (e.g. one attached to a VR headset in HMD orientation, one in Desktop orientation, and one in Screentop orientation), with an interface for this available in the Control Panel

Getting Started

Requirements

Ultraleap Hand Tracking Cameras (standalone devices - not integrated into headsets)

Intel® Core™ i3 processor 5th Gen

2 GB RAM

USB 3.0 cables

Windows® 10, 64-bit

Ultraleap Hand Tracking Software (V5.6+)

Unity 2020LTS (or newer) project

Ultraleap Tracking Preview package for Unity 5.9.0 (or newer)

The Ultraleap Unity Plugin is subject to the Ultraleap Tracking SDK Agreement. The Unity Plugin is also available on GitHub and can be used with MRTK-Unity.
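If you script your environment checks, the minimum versions above can be compared programmatically. The following is a hypothetical helper, not part of any Ultraleap tool. It assumes plain dotted numeric version strings. The installed versions in the example are placeholders you would look up yourself, for instance in the Control Panel or the Unity Package Manager.

```python
"""Hypothetical helper: compare installed versions against the documented minimums."""

# Minimum versions taken from the requirements list above.
MINIMUM_VERSIONS = {
    "Ultraleap Hand Tracking Software": "5.6",
    "Ultraleap Tracking Preview package for Unity": "5.9.0",
}


def parse_version(text: str) -> tuple:
    """Turn a dotted version string such as '5.9.0' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


def meets_minimum(installed: str, minimum: str) -> bool:
    """Return True if `installed` is the same as or newer than `minimum`.

    Shorter versions are padded with zeros, so '5.6' compares equal to '5.6.0'.
    """
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def check_requirements(installed: dict) -> list:
    """Return the names of components that are missing or older than required."""
    problems = []
    for name, minimum in MINIMUM_VERSIONS.items():
        version = installed.get(name)
        if version is None or not meets_minimum(version, minimum):
            problems.append(name)
    return problems


if __name__ == "__main__":
    # Placeholder values: replace with the versions installed on your machine.
    installed = {
        "Ultraleap Hand Tracking Software": "5.6.1",
        "Ultraleap Tracking Preview package for Unity": "5.8.0",
    }
    print(check_requirements(installed))
    # → ['Ultraleap Tracking Preview package for Unity']
```

Comparing integer tuples rather than raw strings matters here: as strings, "5.10.0" would sort before "5.9.0".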

Attach your Ultraleap Hand Tracking Cameras to your PC:

Make sure you use USB 3.0 cables. You can run as many devices as your USB bandwidth and CPU power allow; we recommend limiting your usage to one device per two CPU cores.

Note

USB hubs will run at the rate of the slowest device attached, including any USB 2.0 cables
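The one-device-per-two-cores recommendation can be turned into a quick capacity estimate. This minimal sketch assumes that guideline is the only constraint. It does not measure USB bandwidth, which also limits how many devices you can run. Note that `os.cpu_count()` reports logical cores, which can overstate the physical core count on CPUs with hyper-threading. Allowing at least one device on a single-core machine is an assumption of this sketch.

```python
import os


def recommended_max_devices(cpu_cores: int) -> int:
    """Apply the guideline of at most one tracking device per two CPU cores."""
    if cpu_cores < 1:
        raise ValueError("cpu_cores must be at least 1")
    # Integer division rounds down (5 cores -> 2 devices); this sketch assumes
    # at least one device is always allowed.
    return max(1, cpu_cores // 2)


if __name__ == "__main__":
    # os.cpu_count() can return None, so fall back to 1 core in that case.
    cores = os.cpu_count() or 1
    print(f"{cores} logical cores -> up to {recommended_max_devices(cores)} devices")
```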

Section 1 tells you how to set up hand data for each device, and Section 2 guides you through combining that data. Section 3 explains how to position the tracking devices in your scene.

Note

Make sure the Ultraleap Tracking Preview package for Unity 5.9.0 (or newer) is imported in your Unity 2020LTS (or newer) project.

Section 1: Setting up a Unity scene with one set of hand data per Tracking Device

In your Unity Scene, make sure there is one Leap Service Provider per tracking device

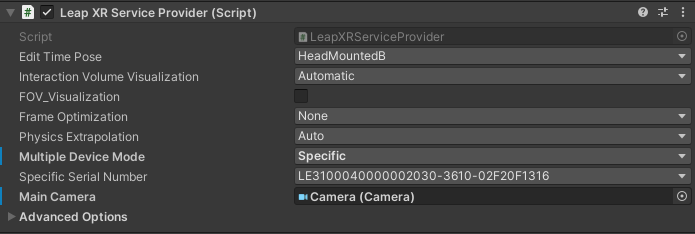

For each Leap Service Provider, select Specific under ‘Multiple Device Mode’

Connect each Ultraleap Hand Tracking Camera to your computer via USB, one at a time, and select its serial number under Specific Serial Number on the corresponding Leap Service Provider
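Each Leap Service Provider should be pinned to exactly one camera, so it is worth checking three things: no serial number is assigned twice, every assigned serial is actually connected, and every connected camera has a provider. The sketch below models that check in Python. The provider names and serial numbers are hypothetical. In Unity, you would compare the Specific Serial Number fields against the devices listed in the Control Panel.

```python
from collections import Counter


def validate_serial_assignments(assignments: dict, connected_serials: set) -> list:
    """Check a mapping of provider name -> assigned serial number.

    Returns a list of human-readable problems. An empty list means every
    connected device has exactly one provider and every provider's serial
    belongs to a connected device.
    """
    problems = []
    counts = Counter(assignments.values())
    for serial, count in sorted(counts.items()):
        if count > 1:
            problems.append(f"serial {serial} is assigned to {count} providers")
    for provider, serial in sorted(assignments.items()):
        if serial not in connected_serials:
            problems.append(f"{provider} uses serial {serial}, which is not connected")
    for serial in sorted(connected_serials - set(assignments.values())):
        problems.append(f"connected device {serial} has no provider")
    return problems


if __name__ == "__main__":
    # Hypothetical provider names and serial numbers, for illustration only.
    assignments = {
        "HMD Provider": "LP11111111111",
        "Desktop Provider": "LP22222222222",
    }
    connected = {"LP11111111111", "LP22222222222", "LP33333333333"}
    for problem in validate_serial_assignments(assignments, connected):
        print(problem)
    # → connected device LP33333333333 has no provider
```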

With all devices connected, you are ready to link hands to the Leap Service Providers. By default you will receive one set of hand data per tracking device. While this may be useful, most scenarios require one set of tracking data, exposed as a LeapProvider data source (so it can be used elsewhere in your scene), where the underlying data comes from multiple tracking devices. This is where aggregation providers come in.

Section 2: Combining tracking data to produce one aggregated set of hand data across all connected devices

Make sure the steps in Section 1 have been completed

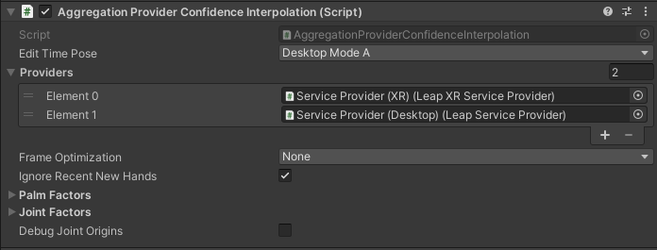

Add a new object to your Scene with the AggregationProviderConfidenceInterpolation.cs component

Reference each of your Leap Service Providers in the Providers list on the AggregationProviderConfidenceInterpolation.cs component

Additional settings can be adjusted; these are explained in the plugin documentation

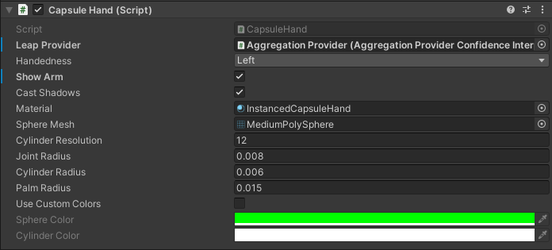
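The idea behind confidence-based aggregation can be illustrated with a weighted average: each device's estimate of a palm position contributes in proportion to how confident that device is. This is a simplified sketch, not the plugin's actual algorithm. It assumes that positions are already in a common coordinate system (see Section 3) and that each device supplies a confidence value between 0 and 1. The `HandObservation` type is hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HandObservation:
    """One device's estimate of a palm position (hypothetical type)."""
    device_serial: str
    palm_position: Vec3  # already expressed in shared world coordinates
    confidence: float    # assumed to lie in [0, 1]


def confidence_weighted_palm(observations: Sequence[HandObservation]) -> Optional[Vec3]:
    """Blend palm positions, weighting each device by its confidence.

    Returns None when no device sees the hand with non-zero confidence, so the
    caller can treat the hand as untracked.
    """
    total = sum(max(0.0, obs.confidence) for obs in observations)
    if total == 0.0:
        return None
    blended = [0.0, 0.0, 0.0]
    for obs in observations:
        weight = max(0.0, obs.confidence) / total  # weights sum to 1
        for axis in range(3):
            blended[axis] += weight * obs.palm_position[axis]
    return (blended[0], blended[1], blended[2])


if __name__ == "__main__":
    seen = [
        HandObservation("HMD", (0.0, 1.0, 0.5), confidence=0.25),
        HandObservation("Desktop", (0.4, 1.0, 0.5), confidence=0.75),
    ]
    print(confidence_weighted_palm(seen))  # x lands near 0.3, closer to the Desktop estimate
```

Blending, rather than switching outright to the best device, avoids a visible jump when one camera's confidence drops as the hand leaves its view.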

You can now assign the AggregationProviderConfidenceInterpolation.cs component to your hand models as their provider, replacing any existing Leap Service Provider references.

The AggregationProviderConfidenceInterpolation.cs component is one way of aggregating data from multiple tracking devices. However, you may want to write your own aggregator to change which factors are considered important when preferring one hand tracking device (data source) over another. We’ve provided an abstract class, LeapAggregatedProviderBase.cs, that serves as a useful template for creating a new aggregator. It takes a collection of Leap Service Providers (one per tracking device), and the derived class aggregates their data by overriding MergeFrames.
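The same template pattern can be sketched outside Unity: a base class owns the per-device providers and leaves the merge policy to subclasses. The Python below is only an analogy for LeapAggregatedProviderBase.cs. The class names, the `Frame` and `Hand` stand-ins, and the `merge_frames` signature are hypothetical and do not reflect the plugin's C# API. The example subclass simply takes each hand from whichever device reports the highest confidence, which is a different policy from confidence interpolation.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Dict, Sequence, Tuple


@dataclass
class Hand:
    """Hypothetical hand record: palm position plus tracking confidence."""
    palm_position: Tuple[float, float, float]
    confidence: float
    source: str = ""  # serial of the device the data came from


@dataclass
class Frame:
    """Hypothetical stand-in for a tracking frame, keyed by 'left'/'right'."""
    hands: Dict[str, Hand] = field(default_factory=dict)


class AggregatedProviderBase(ABC):
    """Holds one provider per device and leaves the merge policy to subclasses."""

    def __init__(self, provider_serials: Sequence[str]):
        self.provider_serials = list(provider_serials)

    def update(self, frames: Sequence[Frame]) -> Frame:
        """Merge the latest frame from every provider into one frame."""
        if len(frames) != len(self.provider_serials):
            raise ValueError("expected one frame per provider")
        return self.merge_frames(frames)

    @abstractmethod
    def merge_frames(self, frames: Sequence[Frame]) -> Frame:
        """Combine per-device frames into a single aggregated frame."""


class HighestConfidenceAggregator(AggregatedProviderBase):
    """For each hand, keep the data from whichever device is most confident."""

    def merge_frames(self, frames: Sequence[Frame]) -> Frame:
        merged = Frame()
        for chirality in ("left", "right"):
            candidates = [f.hands[chirality] for f in frames if chirality in f.hands]
            if candidates:
                merged.hands[chirality] = max(candidates, key=lambda h: h.confidence)
        return merged


if __name__ == "__main__":
    hmd = Frame({"left": Hand((0.0, 1.0, 0.3), 0.4, "HMD")})
    desk = Frame({"left": Hand((0.1, 1.0, 0.3), 0.9, "Desktop"),
                  "right": Hand((0.5, 1.0, 0.3), 0.7, "Desktop")})
    result = HighestConfidenceAggregator(["HMD", "Desktop"]).update([hmd, desk])
    print({side: hand.source for side, hand in result.hands.items()})
    # → {'left': 'Desktop', 'right': 'Desktop'}
```

Keeping the merge policy in a single overridable method means new aggregation strategies can be swapped in without touching the code that gathers frames from each device.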

Section 3: Automatically position the Leap Service Providers in the scene

For most aggregation scenarios, we need to know the relative locations of the tracking devices, because the hand tracking data from each device must be transformed into a common coordinate system before it can be aggregated. This could be done by setting up the relative transforms of the Leap Service Providers in the scene to accurately match the devices’ real-world locations. However, sometimes we might not know these locations accurately, or even at all. In this case, we can use an alignment script to estimate the relative locations of the tracking devices from the hand tracking data.
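Transforming data into a common coordinate system means applying each device’s rigid transform (a rotation followed by a translation) to every point it reports. The sketch below assumes that the rotation is given as a 3×3 matrix and the translation as a vector, together describing the device’s pose in world space. It uses the standard right-handed rotation about the vertical axis. The axis convention, units, and example pose are all assumptions for illustration, and Unity’s own left-handed axes may flip some signs.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


def rotation_about_y(angle_degrees: float) -> Mat3:
    """Right-handed rotation matrix for a turn about the vertical (y) axis."""
    a = math.radians(angle_degrees)
    c, s = math.cos(a), math.sin(a)
    return ((c, 0.0, s),
            (0.0, 1.0, 0.0),
            (-s, 0.0, c))


def device_to_world(point: Vec3, rotation: Mat3, translation: Vec3) -> Vec3:
    """Map a point from a device's local frame into the shared world frame.

    world = R * local + t, where R and t describe the device's pose in the world.
    """
    return tuple(
        sum(rotation[row][col] * point[col] for col in range(3)) + translation[row]
        for row in range(3)
    )


def transform_all(points: Sequence[Vec3], rotation: Mat3, translation: Vec3) -> list:
    """Apply the same device pose to every point reported by that device."""
    return [device_to_world(p, rotation, translation) for p in points]


if __name__ == "__main__":
    # Hypothetical pose: a device 0.3 units along z, turned 90 degrees about y.
    pose_rotation = rotation_about_y(90.0)
    pose_translation = (0.0, 0.0, 0.3)
    palm_in_device = (0.1, 0.2, 0.0)
    print(device_to_world(palm_in_device, pose_rotation, pose_translation))
```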

When you use aggregation with the alignment script, the Leap Service Providers are positioned in the scene automatically, so there is no need to position them manually. To set this up:

Make sure the steps from Sections 1 and 2 have been completed

Import the Ultraleap Tracking Preview Examples package for Unity

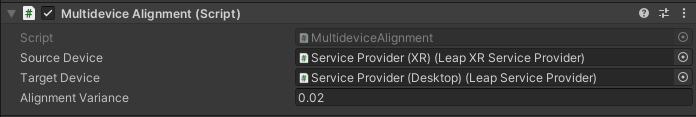

Add one MultideviceAlignment.cs component to an object in the scene for each device you wish to align to a source device. For example, when using a device in HMD mode, you can use that device as a source for each additional Desktop or Screentop device.

Populate the references of the MultideviceAlignment.cs components to complete this section.
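To see how relative device positions can be estimated from hand data alone, consider pairs of palm positions recorded at the same moments by a source device and by the device being aligned. The sketch below solves a simplified version of that problem. It assumes both devices share the same vertical axis, so only a rotation about that axis and a translation need to be found. It then uses the closed-form 2D least-squares (Procrustes) solution in the horizontal plane. This is a stand-in technique for illustration, not the algorithm used by MultideviceAlignment.cs. A real HMD-to-Desktop setup generally needs a full 3D rotation.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def rotate_yaw(p: Sequence[float], theta: float) -> Vec3:
    """Rotate a point about the vertical (y) axis by theta radians.

    Convention used in this sketch: (x, z) -> (x*cos - z*sin, x*sin + z*cos).
    """
    c, s = math.cos(theta), math.sin(theta)
    x, y, z = p
    return (c * x - s * z, y, s * x + c * z)


def estimate_yaw_and_offset(target: Sequence[Vec3], source: Sequence[Vec3]) -> Tuple[float, Vec3]:
    """Least-squares fit of source ~= rotate_yaw(target, theta) + offset.

    `target[i]` and `source[i]` are the same palm position at the same instant,
    as reported by the device being aligned and by the source device.
    """
    if len(target) != len(source) or len(target) < 2:
        raise ValueError("need at least two matching point pairs")
    n = len(target)
    ct = [sum(p[i] for p in target) / n for i in range(3)]  # target centroid
    cs = [sum(p[i] for p in source) / n for i in range(3)]  # source centroid

    # Accumulate 2D cross and dot products of the centred points in the x-z plane.
    cross = dot = 0.0
    for pt, ps in zip(target, source):
        ax, az = pt[0] - ct[0], pt[2] - ct[2]
        bx, bz = ps[0] - cs[0], ps[2] - cs[2]
        cross += ax * bz - az * bx
        dot += ax * bx + az * bz
    if math.hypot(cross, dot) < 1e-12:
        raise ValueError("points are degenerate in the horizontal plane")

    # The yaw that best maps the centred target points onto the source points.
    theta = math.atan2(cross, dot)
    # The offset carries the rotated target centroid onto the source centroid.
    rc = rotate_yaw(ct, theta)
    offset = (cs[0] - rc[0], cs[1] - rc[1], cs[2] - rc[2])
    return theta, offset


if __name__ == "__main__":
    # Synthetic check: build source points from a known yaw and offset.
    true_theta, true_offset = math.radians(30.0), (0.1, 0.05, -0.2)
    target = [(0.0, 1.0, 0.3), (0.2, 1.1, 0.4), (-0.1, 0.9, 0.5), (0.05, 1.2, 0.2)]
    source = [tuple(r + o for r, o in zip(rotate_yaw(p, true_theta), true_offset))
              for p in target]
    theta, offset = estimate_yaw_and_offset(target, source)
    print(math.degrees(theta), offset)
```

With noisy real hand data, collecting many point pairs spread across the shared tracking volume makes the least-squares estimate considerably more stable than using a handful of samples.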

Feedback

As this is an early preview, we would love all your feedback (both good and bad!). Please reach out to us on Discord or via our GitHub Discussions.

Whilst this beta build does not support Ultraleap hand tracking cameras integrated into headsets, we are looking for customers to help us understand how well this feature solves their use case. The feedback that we receive will then help inform any work we do for integrated headsets.